Data science is often called the sexiest job on earth, yet what a newcomer actually needs to know remains a shadow chased by scholars and voracious seekers of knowledge. Among the loads of buzzwords out there, machine learning and its techniques are the first that need to be demystified. Out of the deep blue sea of what-to-know, I decided to pick on precision and recall.

These two terms are among the metrics shown in a classification report; others include the f1-score, accuracy, and so on.

Let’s start with the definitions as they are:

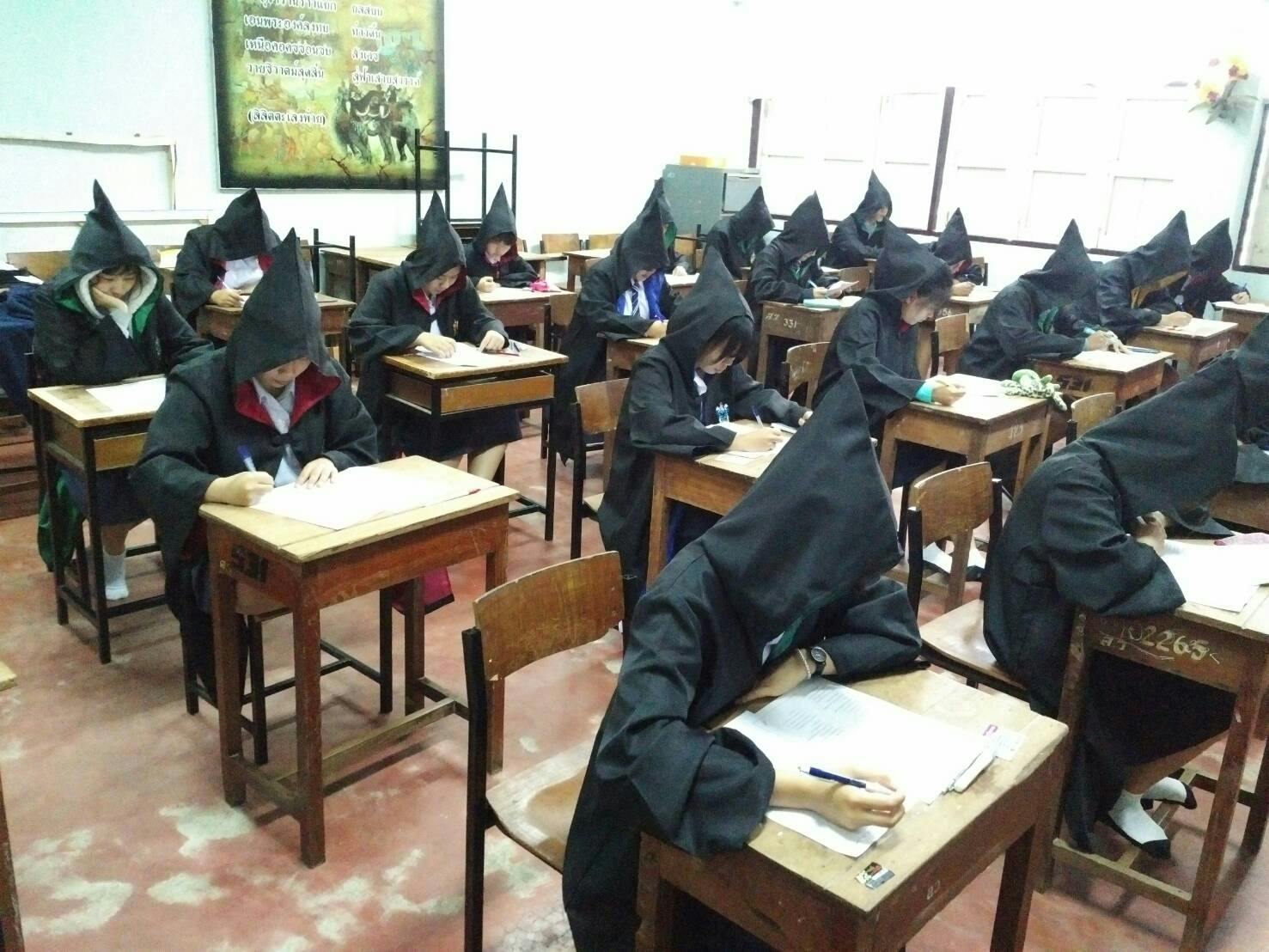

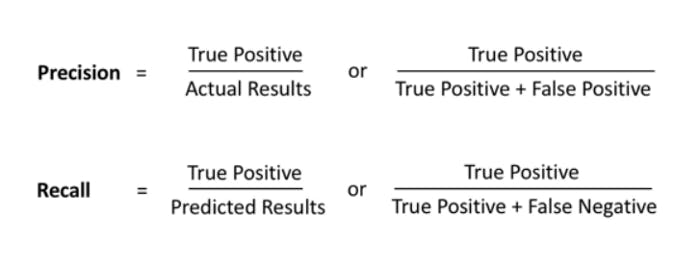

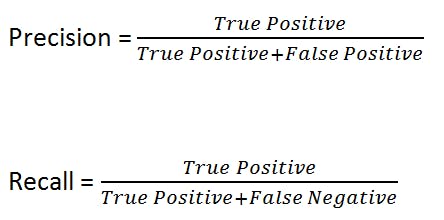

Precision is the percentage of the results your model returns for a class that are actually relevant. Recall is the percentage of the total relevant results that your model classified correctly.

To shed more light on this, let’s assume a class at Hogwarts has 30 students: 15 girls and 15 boys. Their names were written on pieces of paper and shuffled in a basket. You, as Prof. Dumbledore, the principal at Hogwarts, call upon a wizard who is about to be hired for a new sorcery class. His task is to sort the names of the 30 students into boys and girls. He has to classify every name, drawn at random, by checking the spiritual features of each paper.

The wizard stepped out, dipped his wand into the basket, rustled all the papers together with his magical power, and guided them into two empty baskets labeled ‘BOY’ and ‘GIRL’ respectively.

After the sorting process, the Principal reached for the baskets and checked the basket containing the girls first.

There are two labels in this classification task: Boy and Girl.

PRECISION

For class Girl

The principal discovered that the wizard had sorted 20 papers into the girls' basket. Of those 20 predictions, 12 names were correctly predicted as girls. Since precision is all about the relevance of our results, the wizard's precision for the girl class is 12/20.

The precision is 60%.

In other words, the number of correct predictions for a class is divided by all predictions made for that class.

For class Boy

The principal discovered that the wizard had sorted 10 papers into the boys' basket. Of those 10 predictions, 8 names were correctly predicted as boys. Since precision is all about the relevance of our results, the wizard's precision for the boy class is 8/10.

The precision is 80%.

Again, the number of correct predictions for the class is divided by all predictions made for that class.
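The precision arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function name `precision` and its parameter names are my own choices, and the counts are the ones from the story.

```python
def precision(correct_in_basket: int, total_in_basket: int) -> float:
    """Share of the papers placed in a basket that truly belong there."""
    if total_in_basket == 0:
        raise ValueError("no predictions were made for this class")
    return correct_in_basket / total_in_basket


# Counts taken from the wizard's two baskets.
girl_precision = precision(correct_in_basket=12, total_in_basket=20)
boy_precision = precision(correct_in_basket=8, total_in_basket=10)

print(f"Girl precision: {girl_precision:.0%}")  # Girl precision: 60%
print(f"Boy precision: {boy_precision:.0%}")    # Boy precision: 80%
```

Note that the denominator is what the wizard put in the basket, not how many girls or boys really exist in the class.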

RECALL

For class Girl

Everyone except the wizard knows that there are 15 girls in the class. Out of a possible 15, he predicted 12 correctly, which tells us that his magical prowess could only recall 12 of the 15 girls. His recall for the girl class is therefore 12/15.

The recall is 80%.

For class Boy

Everyone except the wizard knows that there are 15 boys in the class. Out of a possible 15, he predicted 8 correctly, which tells us that his magical prowess could only recall 8 of the 15 boys. His recall for the boy class is therefore 8/15.

The recall is about 53%.
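The recall arithmetic can be sketched the same way. As before, the function name `recall` and its parameter names are assumptions made for illustration, and the counts come from the story.

```python
def recall(correct_in_basket: int, actual_in_class: int) -> float:
    """Share of a class's true members that were sorted into the right basket."""
    if actual_in_class == 0:
        raise ValueError("the class has no members")
    return correct_in_basket / actual_in_class


# 15 girls and 15 boys really exist; the wizard found 12 and 8 of them.
girl_recall = recall(correct_in_basket=12, actual_in_class=15)
boy_recall = recall(correct_in_basket=8, actual_in_class=15)

print(f"Girl recall: {girl_recall:.0%}")  # Girl recall: 80%
print(f"Boy recall: {boy_recall:.0%}")    # Boy recall: 53%
```

Here the denominator is the true size of the class, which is the only thing that differs from the precision calculation.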

Now think of the principal as you, the wizard as the machine learning model you've built for the classification task, and the students' names as your dataset.

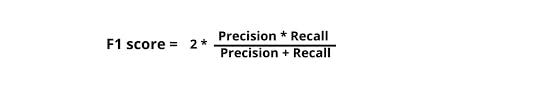

The two terms can be combined into the f1-score, which is defined as the harmonic mean of precision and recall.

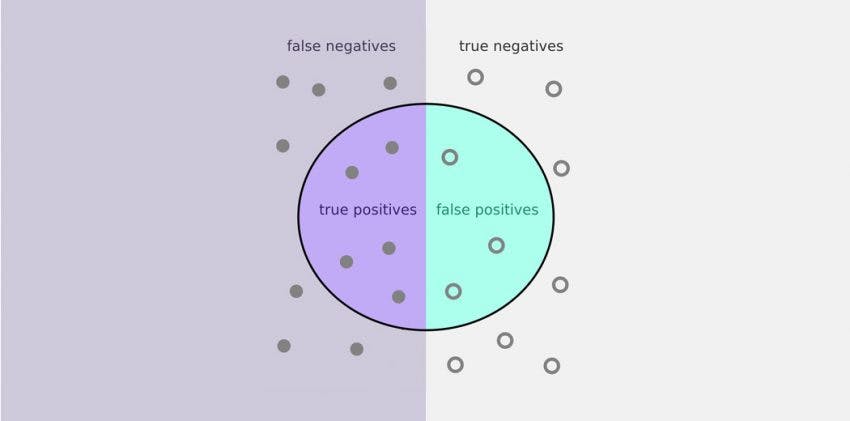
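As a rough sketch, the harmonic mean can be computed from the wizard's numbers like this. The helper name `f1_score` is my own, and returning 0.0 when both inputs are zero is a convention I am assuming, not something stated in the article.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # assumed convention to avoid dividing by zero
    return 2 * precision * recall / (precision + recall)


# Precision and recall per class, using the counts from the story.
girl_f1 = f1_score(precision=12 / 20, recall=12 / 15)
boy_f1 = f1_score(precision=8 / 10, recall=8 / 15)

print(f"Girl f1-score: {girl_f1:.2f}")  # Girl f1-score: 0.69
print(f"Boy f1-score: {boy_f1:.2f}")    # Boy f1-score: 0.64
```

The harmonic mean is pulled toward the smaller of the two values, which is why the boy class, with high precision but low recall, still ends up with the lower score.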

What are the true positives, true negatives, false positives, and false negatives?

True positives: when you predict an observation belongs to a class and it actually does belong to that class.

True negatives: when you predict an observation does not belong to a class and it actually does not belong to that class.

False positives: when you predict an observation belongs to a class and in reality it does not.

False negatives: when you predict an observation does not belong to a class and in reality it does.
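To see how these four outcomes connect back to precision and recall, here is a small sketch that counts them for one class. The function `confusion_counts` and the six-name toy lists are hypothetical and are not the wizard's 30 names. It relies on the standard relations precision = TP / (TP + FP) and recall = TP / (TP + FN).

```python
def confusion_counts(actual, predicted, positive):
    """Count TP, FP, FN and TN, treating `positive` as the class of interest."""
    tp = fp = fn = tn = 0
    for truth, guess in zip(actual, predicted):
        if guess == positive and truth == positive:
            tp += 1  # predicted the class, and it really is the class
        elif guess == positive:
            fp += 1  # predicted the class, but it is not
        elif truth == positive:
            fn += 1  # missed a real member of the class
        else:
            tn += 1  # correctly left out of the class
    return tp, fp, fn, tn


# Hypothetical toy data for illustration only.
actual = ["girl", "girl", "girl", "boy", "boy", "boy"]
predicted = ["girl", "girl", "boy", "girl", "boy", "boy"]

tp, fp, fn, tn = confusion_counts(actual, predicted, positive="girl")
print(tp, fp, fn, tn)  # 2 1 1 2

girl_precision = tp / (tp + fp)  # correct "girl" predictions / all "girl" predictions
girl_recall = tp / (tp + fn)     # correct "girl" predictions / all real girls
```

Swapping `positive="girl"` for `positive="boy"` gives the counts for the other class, which is how a per-class report is built up.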

Feel free to use the comment box for your contributions or corrections; the writer is still a learner too. Thanks for reading.